Rivian to adopt Tesla's charging standard in EVs and chargers

By Abhirup Roy SAN FRANCISCO (Reuters) - Electric vehicle maker Rivian said on Tuesday it will adopt Tesla's charging standard, giving

2023-06-21 10:47

Paytm Leads $6 Billion Stock Rally as India Startups Seek Redemption

India’s dream of developing a market for consumer-focused technology startups has gotten back on track as digital payments

2023-06-21 09:19

Raytheon gets $1.15 billion missile contract from US Air Force

Raytheon Technologies said on Tuesday it has received a $1.15 billion contract from the U.S. Air Force for

2023-06-21 06:49

Exclusive-Tesla standard: BTC Power joins move to add to EV chargers

By Abhirup Roy SAN FRANCISCO BTC Power will add Tesla's standard to its electric vehicle chargers next year,

2023-06-21 03:59

Cisco launches new AI networking chips to compete with Broadcom, Marvell

Cisco Systems on Tuesday launched networking chips for AI supercomputers that would compete with offerings from Broadcom and

2023-06-21 01:59

If you have blue eyes you may have a higher risk of alcoholism

Research from the University of Vermont suggests that there may be a link between having blue eyes and alcoholism. The study, conducted in 2015, was led by Dr Arvis Sulovari and assistant professor Dawei Li, and was the first to draw a direct connection between the colour of someone's eyes and their risk of developing alcoholism. Professor Li built a database of more than 10,000 individuals who had been diagnosed with at least one psychiatric illness, including conditions such as addiction. Speaking of these conditions, Li, an expert in microbiology and molecular genetics, explained that they were "complex disorders" and that "many genes" and "environmental triggers" were involved. The researchers used the database to identify people with a dependency on alcohol and discovered an interesting correlation: those with lighter-coloured eyes, especially blue, had higher rates of alcohol addiction. The researchers checked their findings three times to be sure. "This suggests an intriguing possibility that eye colour can be useful in the clinic for alcohol dependence diagnosis," said Dr Sulovari. The study also found that the genes that determine eye colour and those associated with excessive alcohol use are located on the same chromosome. However, more research will be needed to understand the potential link between eye colour and higher rates of alcohol dependency. Researchers are still unsure why the link exists, with Professor Li saying that much of genetics is "still unknown".

2023-06-20 23:29

YouTube removed video of Robert F. Kennedy, Jr. for violating vaccine misinformation policy

YouTube said on Monday that it had removed a video of presidential hopeful Robert F. Kennedy, Jr. being interviewed by podcast host Jordan Peterson for violating its policy prohibiting vaccine misinformation.

2023-06-20 22:28

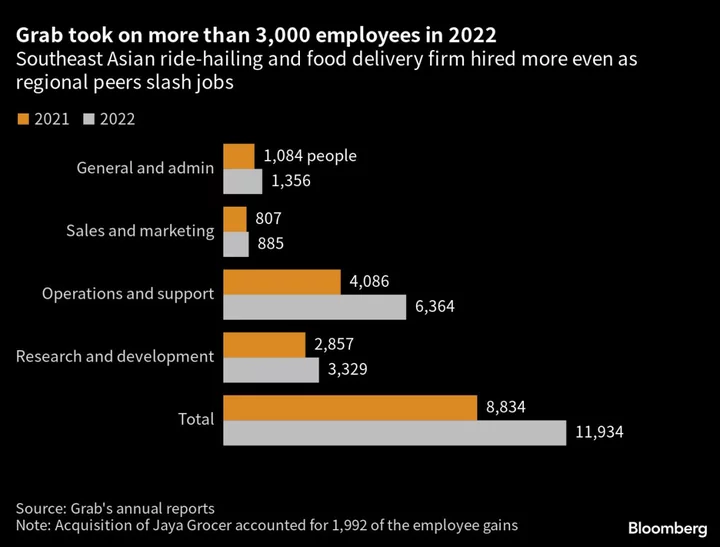

Singapore’s Grab Plans Biggest Job-Cut Round Since Pandemic

Grab Holdings Ltd. is preparing its biggest round of layoffs since the pandemic, as the internet company faces

2023-06-20 16:53

Scientists found the oldest water on the planet and drank it

If you found water that was more than two billion years old, would your first instinct be to drink it? One scientist did exactly that after finding the oldest water ever discovered on the planet. A team from the University of Toronto, led by Professor Barbara Sherwood Lollar, made the find while studying a Canadian mine in 2016. Tests showed that the water they unearthed was between 1.5 billion and 2.64 billion years old. Having remained completely isolated, it was the oldest water ever found on Earth. Remarkably, the tests also revealed that life had once been present in the water. Speaking to BBC News, Professor Sherwood Lollar said: “When people think about this water they assume it must be some tiny amount of water trapped within the rock. But in fact it’s very much bubbling right up out at you. These things are flowing at rates of litres per minute – the volume of the water is much larger than anyone anticipated.” Discussing the presence of life in the water, Sherwood Lollar added: “By looking at the sulphate in the water, we were able to see a fingerprint that’s indicative of the presence of life. And we were able to indicate that the signal we are seeing in the fluids has to have been produced by microbiology - and most importantly has to have been produced over a very long time scale. The microbes that produced this signature couldn’t have done it overnight. This has to be an indication that organisms have been present in these fluids on a geological timescale.” The professor also revealed that she tasted the water herself, but what was it like? “If you’re a geologist who works with rocks, you’ve probably licked a lot of rocks,” Sherwood Lollar told CNN. She said the water was "very salty and bitter" and "much saltier than seawater."

2023-06-20 14:56

Billionaire Infosys Chair Gives Alma Mater $38.5 Million for AI

Billionaire Infosys Ltd. co-founder Nandan Nilekani will donate $38.5 million to his alma mater Indian Institute of Technology

2023-06-20 14:24

Scientists warn of threat to internet from AI-trained AIs

Future generations of artificial intelligence chatbots trained using data from other AIs could lead to a downward spiral of gibberish on the internet, a new study has found. Large language models (LLMs) such as ChatGPT have taken off on the internet, with many users adopting the technology to produce a whole new ecosystem of AI-generated texts and images. But using the output of such AI systems to train subsequent generations of AI models could result in “irreversible defects” and junk content, according to the new study, which has yet to be peer-reviewed. AI models like ChatGPT are trained on vast amounts of data pulled from internet platforms, data that until now has been mostly human-generated. But AI-generated content has a growing presence on the internet. Researchers, including some from the University of Oxford in the UK, set out to understand what happens when successive generations of AIs are trained on each other's output. They found that the widespread use of LLMs to publish content on the internet on a large scale “will pollute the collection of data to train them” and lead to “model collapse”. “We discover that learning from data produced by other models causes model collapse – a degenerative process whereby, over time, models forget the true underlying data distribution,” the scientists wrote in the study, posted as a preprint on arXiv. The findings suggest there is a “first mover advantage” when it comes to training LLMs, since models trained before the web fills up with AI-generated text have access to cleaner data. The scientists liken this to training AI models on music written by human composers and played by human musicians, then using that AI output to train other models, with the quality of the music diminishing each time. Because subsequent generations of AI models are likely to encounter poorer-quality data at their source, they may start to misinterpret information and insert false information, in a process the scientists call “data poisoning”.
They warned that the scale at which data poisoning can happen has changed drastically with the advent of LLMs. Just a few iterations of training on generated data can lead to major degradation, even when the original data is preserved, the scientists said. Over time, mistakes could compound, forcing models that learn from generated data to misunderstand reality. “This in turn causes the model to misperceive the underlying learning task,” the researchers said. The scientists cautioned that steps must be taken to distinguish AI-generated content from human-generated content, along with efforts to preserve original human-made data for future AI training. “To make sure that learning is sustained over a long time period, one needs to make sure that access to the original data source is preserved and that additional data not generated by LLMs remain available over time,” they wrote in the study. “Otherwise, it may become increasingly difficult to train newer versions of LLMs without access to data that was crawled from the Internet prior to the mass adoption of the technology, or direct access to data generated by humans at scale.”
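The "model collapse" effect can be illustrated with a toy simulation. This is an illustrative simplification, not the study's code: each "model" is just a Gaussian fitted to its training data, and every generation is trained on samples drawn from the previous generation's model. Small estimation errors compound, and the fitted spread tends to drift toward zero, so the tails of the original distribution are forgotten. The `fresh_fraction` parameter is a hypothetical stand-in for keeping human-generated data in each training set. The function names, sample sizes and generation counts are all assumptions chosen for illustration.

```python
import math
import random
import statistics


def fit_gaussian(samples):
    """Maximum-likelihood Gaussian fit: sample mean and population standard deviation."""
    mu = statistics.fmean(samples)
    sigma = math.sqrt(statistics.fmean((x - mu) ** 2 for x in samples))
    return mu, sigma


def simulate(generations, n, fresh_fraction=0.0, seed=0):
    """Fit each generation to data sampled (at least partly) from the previous model.

    fresh_fraction is the share of each training set drawn from the true
    N(0, 1) distribution, a hypothetical stand-in for preserved human data.
    Returns the fitted standard deviation after the final generation.
    """
    rng = random.Random(seed)
    n_fresh = round(n * fresh_fraction)
    # Generation 0 is trained purely on "human" data from the true distribution.
    mu, sigma = fit_gaussian([rng.gauss(0.0, 1.0) for _ in range(n)])
    for _ in range(generations):
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_fresh)]
        mu, sigma = fit_gaussian(fresh + synthetic)
    return sigma


collapsed = simulate(generations=2000, n=50)
mixed = simulate(generations=2000, n=50, fresh_fraction=0.5)
print(f"only model-generated data: sigma = {collapsed:.3g}")
print(f"half fresh human data:     sigma = {mixed:.3g}")
```

In this toy setting, the purely recursive run ends with a standard deviation close to zero, while the run that keeps half of each training set drawn from the true distribution stays near the original value of 1. Real LLMs are vastly more complex, so this only shows the direction of the effect, not its size.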

2023-06-20 13:52

Meta reveals new ‘Voicebox’ AI that is too risky to release

Meta has created a new system that it says can generate convincing speech in a variety of styles, but it will not release it for fear of the risks. The new tool, called “Voicebox”, can produce speech in different styles, creating new voices either from scratch or from a sample. It can generate speech in six languages and also perform a range of other tasks, such as noise removal. Meta says it is a major advance on previous speech systems, which required specific training for each task. Voicebox, by contrast, can simply be given raw audio and its transcription and then be used to modify an audio sample. It is far more effective than its competitors, Meta claimed in its announcement: it can generate words with a 1.9 per cent error rate, compared with 5.9 per cent for competitor Vall-E, for instance, and do so as much as 20 times faster. Meta said that it had been built on a new approach it calls “Flow Matching”, which allows the system to learn from speech that has not been carefully labelled, so that it can be trained on larger and more diverse data. Voicebox was trained on 50,000 hours of speech and transcripts from public domain audiobooks in English, French, Spanish, German, Polish, and Portuguese, Meta said. Once trained, it can be given an audio recording and fill in speech from the context, Meta said. That could be used to create a realistic-sounding voice from just two seconds of speech, for instance, potentially giving a voice to people who cannot speak or adding people’s voices to games. It could also be used to translate a passage of speech from one language to another while keeping the speaker's style, Meta said, allowing people to talk to each other authentically even if they don’t speak the same language. It could also be useful in more technical scenarios, such as audio editing, where it can be used to replace words that were not properly recorded.
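The error rates quoted are presumably word error rates (WER), the usual intelligibility measure for generated speech: the generated audio is transcribed by a speech recognizer and the transcript is compared with the intended text. The sketch below shows how WER is conventionally computed, as a word-level edit distance divided by the number of reference words. It is a generic illustration with a hypothetical function name, not Meta's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the minimum number of edits turning the reference words seen
    # so far into the first j hypothesis words (one row of the DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word not in hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


# One substituted word out of five reference words gives a WER of 0.2 (20 per cent).
print(word_error_rate("the quick brown fox jumps", "the quick brown fox jumped"))
```

On this measure, a WER of 1.9 per cent means roughly 1.9 word errors (substitutions, deletions or insertions) per 100 words of reference text.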
But Meta said the risks were serious enough that it would not release the model. It did not point to specific harms, but said that “as with other powerful new AI innovations, we recognize that this technology brings the potential for misuse and unintended harm”. Numerous reports have warned that such systems could be used to copy people’s voices without their consent and in harmful ways, such as creating fake videos of news events or impersonating people during scam calls. “There are many exciting use cases for generative speech models, but because of the potential risks of misuse, we are not making the Voicebox model or code publicly available at this time,” Meta said in a statement. “While we believe it is important to be open with the AI community and to share our research to advance the state of the art in AI, it’s also necessary to strike the right balance between openness with responsibility.” It also pointed to a separate paper, published on its website, in which it detailed how it had built a “highly effective” system that can distinguish between authentic speech and audio generated with Voicebox.

2023-06-20 01:59