New wearable listens to belly gurgling and other bodily noises to monitor health

New technology allows doctors to listen to the gurgle of people’s digestion and other noises to monitor their health. Doctors use sound inside their patients’ bodies to gather a host of information, listening to the air as it moves through their lungs or the beats of their heart, as well as the processing of food. These sounds can provide important ways to understand people’s health – and noticing when they change or stop could be life-saving. But there is no easy way for doctors to monitor those things continually, or from a distance. Now a new breakthrough wearable allows doctors to continuously track those sounds by sticking technology to people’s skin. The soft, small wearables can be attached to almost any part of the body, in multiple locations, and will track the sounds without wires. Researchers have already used the device on 15 premature babies, as well as 55 adults, monitoring people with a variety of different conditions such as respiratory diseases. They found that the devices performed with clinical-grade accuracy – but also that they provided entirely new ways of caring for people. “Currently, there are no existing methods for continuously monitoring and spatially mapping body sounds at home or in hospital settings,” said Northwestern’s John A Rogers, a bioelectronics pioneer who led the device development. “Physicians have to put a conventional, or a digital, stethoscope on different parts of the chest and back to listen to the lungs in a point-by-point fashion. In close collaborations with our clinical teams, we set out to develop a new strategy for monitoring patients in real-time on a continuous basis and without encumbrances associated with rigid, wired, bulky technology.” One of the important breakthroughs in the device is that it can be used in several places at once – with researchers likening it to having a collection of doctors all listening at once.
“The idea behind these devices is to provide highly accurate, continuous evaluation of patient health and then make clinical decisions in the clinics or when patients are admitted to the hospital or attached to ventilators,” said Dr Ankit Bharat, a thoracic surgeon at Northwestern Medicine, who led the clinical research in the adult subjects, in a statement. “A key advantage of this device is to be able to simultaneously listen and compare different regions of the lungs. Simply put, it’s like up to 13 highly trained doctors listening to different regions of the lungs simultaneously with their stethoscopes, and their minds are synced to create a continuous and a dynamic assessment of the lung health that is translated into a movie on a real-life computer screen.” The work is described in a new paper, ‘Wireless broadband acousto-mechanical sensing system for continuous physiological monitoring’, published in Nature Medicine.

2023-11-17 04:50

YouTube reveals AI music experiments that allow people to make music in other people’s voices and by humming

YouTube has revealed a host of new, musical artificial intelligence experiments. The features let people create music just by writing a short piece of text, instantly and automatically generating music in the style of a number of artists. Users can also hum a simple song into their computer and have it turned into a detailed and rich piece of music. The new experiments are YouTube’s latest attempt to deal with the possibilities and dangers of AI and music. Numerous companies and artists have voiced fears that artificial intelligence could make it easier to infringe on copyright or produce real-sounding fake songs. One of the new features is called “Dream Track”, and some creators already have it, with the aim of using it to soundtrack YouTube Shorts. It is intended to quickly produce songs in people’s style. Users can choose a song in the style of a number of officially licensed artists: Alec Benjamin, Charlie Puth, Charli XCX, Demi Lovato, John Legend, Papoose, Sia, T-Pain, and Troye Sivan. They can then ask for a particular song, deciding on the tone or themes of the song, and it can then be used in their post on Shorts. Another is called Music AI Tools, and is aimed at helping musicians with their creative process. It came out of YouTube’s Music AI Incubator, a working group of artists, songwriters and producers who are experimenting with the use of artificial intelligence in music. “It was clear early on that this initial group of participants were intensely curious about AI tools that could push the limits of what they thought possible. They also sought out tools that could bolster their creative process,” YouTube said in an announcement. “As a result, those early sessions led us to iterate on a set of music AI tools that experiment with those concepts. Imagine being able to more seamlessly turn one’s thoughts and ideas into music; like creating a new guitar riff just by humming it or taking a pop track you are working on and giving it a reggaeton feel.
“We’re developing prospective tools that could bring these possibilities to life and Music AI Incubator participants will be able to test them out later this year.” The company gave an example of one of those tools, where a producer was able to hum a tune and then have it turned into a track that sounded as if it had been professionally recorded. The tools are built on Google DeepMind’s Lyria system. The company said Lyria was built specifically for music, overcoming problems such as AI’s difficulties with producing long sequences of sound that keep their continuity and do not break apart. At the same time, DeepMind said it had been working on a technology called SynthID to combine it with Lyria. That will put an audio watermark into the sound, which humans cannot hear but which can be recognised by tools so that they know the songs have been automatically generated. “This novel method is unlike anything that exists today, especially in the context of audio,” DeepMind said. “The watermark is designed to maintain detectability even when the audio content undergoes many common modifications such as noise additions, MP3 compression, or speeding up and slowing down the track. SynthID can also detect the presence of a watermark throughout a track to help determine if parts of a song were generated by Lyria.” The announcement comes just days after YouTube announced restrictions on unauthorised AI clones of musicians. Earlier this week it said that users would have to tag AI-generated content that looked realistic, and music that “mimics an artist’s unique singing or rapping voice” will be banned entirely. Those videos have proven popular in recent months, largely thanks to online tools that allow people to easily combine a voice with an existing song and create something entirely new, such as Homer Simpson singing popular hits. Those will not be affected straight away, with the new requirements rolling out next year.

2023-11-17 04:48

GM's Cruise cancels its employee equity program in Q4

SAN FRANCISCO General Motors' self-driving technology unit Cruise has canceled its program that allows employees to cash out

2023-11-17 02:22

Amazon Shoppers Can Buy a Hyundai Online Starting Next Year

Amazon.com Inc. customers will be able to buy Hyundai Motor Co. vehicles on the e-commerce giant’s website starting

2023-11-17 02:16

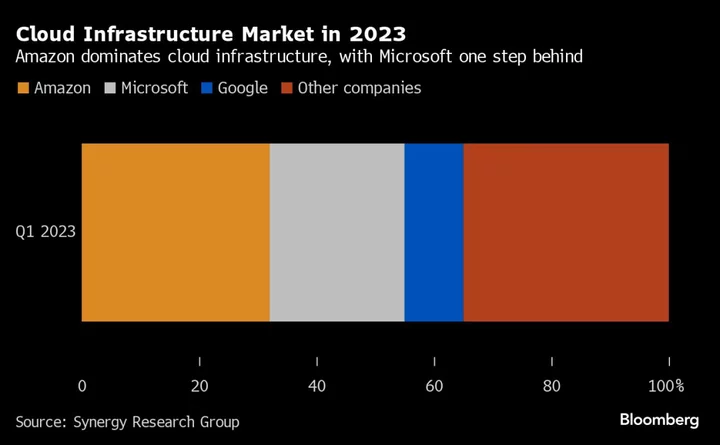

How Amazon Is Going After Microsoft's Cloud Computing Ambitions

Amazon is the driving force behind a trio of advocacy groups working to thwart Microsoft's growing ambition to

2023-11-17 01:23

HelloFresh Falls Most Since IPO After Warning on Profit

HelloFresh SE fell a record 25% on Thursday, erasing €886 million ($964 million) of market value, after the

2023-11-17 00:28

Fed Latest: Barr Issues Warning on Hedge Funds’ Basis Trades

The Federal Reserve’s top banking regulator on Thursday joined a chorus of US officials expressing concern about highly

2023-11-17 00:27

Biggest-ever simulation of the universe could finally explain how we got here

It’s one of the biggest questions humans have asked themselves since the dawn of time, but we might be closer than ever to understanding how the universe developed the way it did and we all came to be here. Computer simulations are happening all the time in the modern world, but a new study is attempting to simulate the entire universe in an effort to understand conditions in the far reaches of the past. Full-hydro Large-scale structure simulations with All-sky Mapping for the Interpretation of Next Generation Observations (or FLAMINGO for short) are being run out of the UK. The simulations are taking place at the DiRAC facility and they’re being launched with the ultimate aim of tracking how everything in the universe evolved to the stage it is at now. The sheer scale of it is almost impossible to grasp, but the biggest of the simulations features a staggering 300 billion particles, each with the mass of a small galaxy. One of the most significant parts of the research comes in the third and final paper showcasing the research, which focuses on a factor known as sigma 8 tension. This tension is based on calculations of the cosmic microwave background, which is the microwave radiation that came just after the Big Bang. Out of their research, the experts involved have learned that normal matter and neutrinos are both required when it comes to predicting things accurately through the simulations. “Although the dark matter dominates gravity, the contribution of ordinary matter can no longer be neglected, since that contribution could be similar to the deviations between the models and the observations,” research leader and astronomer Joop Schaye of Leiden University said. Simulations that include normal matter as well as dark matter are far more complex, given how complicated dark matter’s interactions with the universe are. Despite this, scientists have already begun to analyse the very formations of the universe across dark matter, normal matter and neutrinos.
“The effect of galactic winds was calibrated using machine learning, by comparing the predictions of lots of different simulations of relatively small volumes with the observed masses of galaxies and the distribution of gas in clusters of galaxies,” said astronomer Roi Kugel of Leiden University. The research for the three papers, published in the Monthly Notices of the Royal Astronomical Society, was undertaken partly thanks to a new code, as astronomer Matthieu Schaller of Leiden University explains. “To make this simulation possible, we developed a new code, SWIFT, which efficiently distributes the computational work over 30 thousand CPUs.”

2023-11-16 23:49

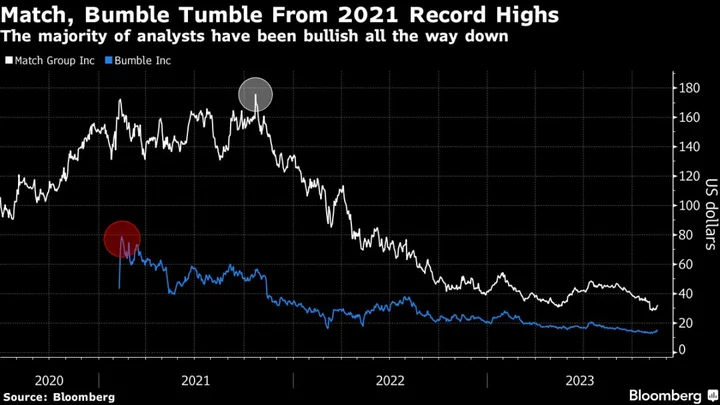

Match and Bumble’s 80% Stock Plunges Test Analyst Commitment

Online dating stocks have mostly been ghosted by this year’s scorching technology rally. Many on Wall Street continue

2023-11-16 23:22

Ramaswamy’s Crypto Policy Calls for Deregulation and Gutting the SEC

Vivek Ramaswamy vows to rescind most federal cryptocurrency regulations and drastically reduce headcount at the Securities and Exchange

2023-11-16 22:53

BlackRock woos investors for ethereum trust to further crypto push

Asset management giant BlackRock on Thursday began courting public investors for an ethereum trust, doubling down on its

2023-11-16 21:28

Water discovered to be leaking from Earth's crust into the planet's core

There is much we still don’t know about the inside of our planet – but scientists recently discovered water is slowly leaking down there from the surface. It’s not a simple journey. The liquid is dripping down descending tectonic plates, before eventually reaching the core after a 2,900 kilometre journey. And while the process is slow, it has over billions of years formed a new surface between the molten metal of the outer core and the outer mantle of the Earth. In a new study, scientists at Arizona State University have said the water is triggering a chemical reaction, creating the new layer, which is a “few hundred kilometres thick”. (That’s “thin” when it comes to the inner layers of the Earth.) “For years, it has been believed that material exchange between Earth's core and mantle is small. Yet, our recent high-pressure experiments reveal a different story. We found that when water reaches the core-mantle boundary, it reacts with silicon in the core, forming silica,” co-author Dr Dan Shim wrote. “This discovery, along with our previous observation of diamonds forming from water reacting with carbon in iron liquid under extreme pressure, points to a far more dynamic core-mantle interaction, suggesting substantial material exchange.” So what does it mean for all of us up on the surface? The ASU release said: “This finding advances our understanding of Earth's internal processes, suggesting a more extensive global water cycle than previously recognised. The altered ‘film’ of the core has profound implications for the geochemical cycles that connect the surface-water cycle with the deep metallic core.”

2023-11-16 21:19