The Scientific Reason You Should Microwave Popcorn With “This Side Up”

Microwave popcorn bags are often covered in words—here’s why you should pay attention to “This Side Up.”

2023-07-05 03:20

Time ran five times slower in the early universe, new study finds

New findings have revealed that time ran five times slower in the early universe, after scientists published new research into quasars. Quasars are luminous active galactic nuclei, and studying them has allowed scientists to measure time. The variation in brightness of quasars from the early universe has been measured to determine time dilation back to a billion years after the Big Bang. Experts have found that there was an era in which clocks moved five times slower than they do in the present day. The findings come as a relief to many cosmologists who have been perplexed by previous results from studying quasars. The discovery that the universe is expanding led to the theorisation of “time dilation” – the idea that time moved slower the smaller the universe was. Professor Geraint Lewis of the University of Sydney, the lead author of a new study, said in a statement: “Looking back to a time when the universe was just over a billion years old, we see time appearing to flow five times slower.” He continued: “If you were there, in this infant universe, one second would seem like one second – but from our position, more than 12 billion years into the future, that early time appears to drag.” To measure the extent of time dilation, scientists turned to quasars, because their brightness changes over timescales that can be estimated. The most distant visible quasar lies 13 billion years back in time. Quasars' brightness varies due to turbulence and lumpiness in their accretion disks. Lewis explained the phenomenon as being “a bit like the stock market”. He said: “Over the last couple of decades, we’ve seen there is a statistical pattern to the variation, with timescales related to how bright a quasar is and the wavelength of its light.”
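Cosmologists usually express this effect through redshift, z: an interval lasting a given time at a distant source is observed today stretched by the factor (1 + z). The article quotes only the fivefold slowdown, not a redshift, so the sketch below simply applies that standard relation; the function names are illustrative, and the result that "five times slower" corresponds to z = 4 comes from the formula, not from the study's data.

```python
def observed_duration(rest_frame_seconds: float, redshift: float) -> float:
    """Return how long an interval of `rest_frame_seconds` at the source appears to last today.

    Cosmological time dilation stretches every interval by the factor (1 + z),
    where z is the source's redshift.
    """
    if redshift < 0:
        raise ValueError("cosmological redshift must be non-negative")
    return rest_frame_seconds * (1.0 + redshift)


def redshift_for_slowdown(slowdown_factor: float) -> float:
    """Return the redshift at which distant clocks appear `slowdown_factor` times slower."""
    if slowdown_factor < 1:
        raise ValueError("slowdown factor must be at least 1")
    return slowdown_factor - 1.0


if __name__ == "__main__":
    z = redshift_for_slowdown(5.0)
    print(z)                          # 4.0
    print(observed_duration(1.0, z))  # 5.0: one second at the source looks like five
```

In other words, Lewis's "one second would seem like one second" holds in the quasar's own frame, while the (1 + z) factor describes how that second looks from Earth.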

2023-07-04 23:58

Scientists discover secret planet hiding in our solar system

There are eight planets in our solar system – plus poor old Pluto, which was demoted in 2006 – but what if there were more? Turns out that might be the case. Astronomers have calculated there is a 7 per cent chance that Earth has another neighbour hiding in the Oort cloud, a spherical region of ice chunks and rocks that is tens of thousands of times farther from the sun than we are. “It’s completely plausible for our solar system to have captured such an Oort cloud planet,” said Nathan Kaib, a co-author on the work and an astronomer at the Planetary Science Institute. Hidden worlds like this are “a class of planets that should definitely exist but have received relatively little attention” until now, he said. If a planet is hiding in the Oort cloud, it’s almost certainly an ice giant. Large planets like Jupiter and Saturn are generally born as twins. They have huge gravitational pulls of their own, however, and sometimes destabilise one another. That could have led to a planet being nudged out of the solar system entirely – or exiled to its outer reaches, where the Oort cloud resides. “The survivor planets have eccentric orbits, which are like the scars from their violent pasts,” said lead author Sean Raymond, researcher at the University of Bordeaux’s Astrophysics Laboratory. That means that the Oort cloud planet could have a significantly elongated orbit, unlike the near-perfect circle Earth tracks around the sun. Trouble is, when things are that far away, they’re pretty difficult to spot. “It would be extremely hard to detect,” added Raymond. “If a Neptune-sized planet existed in our own Oort cloud, there’s a good chance that we wouldn’t have found it yet,” said Malena Rice, an astronomer at MIT not involved in this work. “Amazingly, it can sometimes be easier to spot planets hundreds of light-years away than those right in our own backyard.” Time to crack out the telescope.
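How "elongated" an orbit is can be put into numbers with its eccentricity, e: for an ellipse with semi-major axis a, the closest and farthest distances from the Sun are a(1 - e) and a(1 + e). The sketch below compares Earth (e ≈ 0.0167) with a hypothetical Oort cloud planet; its values (a = 5,000 AU, e = 0.5) are illustrative assumptions, not figures from the study.

```python
from dataclasses import dataclass


@dataclass
class Orbit:
    """A bound elliptical orbit around the Sun."""
    semi_major_axis_au: float  # half the longest diameter of the ellipse, in astronomical units
    eccentricity: float        # 0 is a perfect circle; values close to 1 are very elongated

    def __post_init__(self) -> None:
        if not 0.0 <= self.eccentricity < 1.0:
            raise ValueError("a bound elliptical orbit needs 0 <= e < 1")

    def perihelion_au(self) -> float:
        """Closest distance to the Sun: a * (1 - e)."""
        return self.semi_major_axis_au * (1.0 - self.eccentricity)

    def aphelion_au(self) -> float:
        """Farthest distance from the Sun: a * (1 + e)."""
        return self.semi_major_axis_au * (1.0 + self.eccentricity)

    def far_to_near_ratio(self) -> float:
        """How many times farther the body gets at aphelion than at perihelion."""
        return self.aphelion_au() / self.perihelion_au()


if __name__ == "__main__":
    earth = Orbit(semi_major_axis_au=1.0, eccentricity=0.0167)
    # Hypothetical Oort cloud planet: both numbers are illustrative assumptions.
    oort_planet = Orbit(semi_major_axis_au=5_000.0, eccentricity=0.5)
    print(round(earth.far_to_near_ratio(), 3))                     # 1.034
    print(oort_planet.perihelion_au(), oort_planet.aphelion_au())  # 2500.0 7500.0
    print(oort_planet.far_to_near_ratio())                         # 3.0
```

Earth's distance from the Sun varies by only about 3 per cent, whereas even this moderately eccentric example swings by a factor of three.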

2023-07-04 23:15

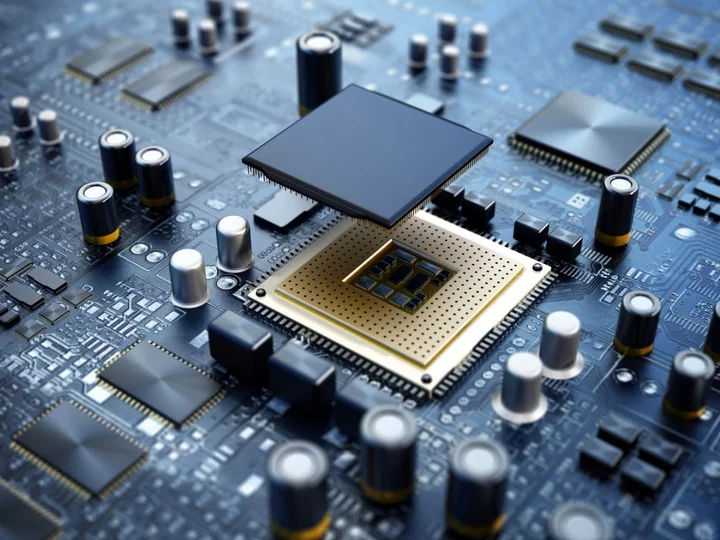

AI takes just five hours to design functional computer

Researchers in China have developed an artificial intelligence tool capable of designing a working computer in under five hours. The team of 19 computer scientists from five different institutions made the AI breakthrough after setting out to prove that machines can create computer chips in a similar way to humans. The feat was performed 1,000 times faster than a human team could have achieved it, the researchers claimed, marking a major step towards building self-evolving machines. “Design activity... distinguishes humanity from other animals and traditional machines, and endowing machines with design abilities at the human level or beyond has been a long-term pursuit,” the scientists wrote in a paper detailing their research. “We present a new AI approach to automatically design a central processing unit (CPU), the brain of a computer, and one of the world’s most intricate devices humanity has ever designed.” The project involved the layout of an industrial-scale RISC-V CPU, capable of running the Linux operating system and achieving an accuracy of 99.99 per cent in validation tests. The AI bypassed the manual programming and verification process of the typical design cycle, which the researchers said “consumes more than 60-80 per cent of the design time and resources” of human teams. The AI also made design discoveries of its own, independently arriving at the von Neumann architecture, first described in 1945. The overall performance of the CPU is relatively modest compared to modern computers, with the researchers saying it can perform at a similar level to a 1991 Intel 80486SX CPU. Developing the AI approach, however, has the potential to “reform the semiconductor industry by significantly reducing the design cycle”, the researchers said. The research is detailed in a study titled ‘Pushing the limits of machine design: Automated CPU design with AI’.
Leading AI chip maker Nvidia has previously used artificial intelligence to optimise its computer chip designs, publishing a new approach to AI-powered chip design in March that could significantly improve the cost and performance of CPUs.

2023-07-04 22:25

Scientists discover that megalodons went extinct because of themselves

Scientists believe they have discovered the cause of the megalodon's extinction – and no, it’s not Jason Statham. Experts have been conducting research on fossilised teeth from the biggest species of shark the world has ever seen, which went extinct around 3.6 million years ago and measured at least 15 metres long. Research published in the journal Proceedings of the National Academy of Sciences explains that the animal was actually partially warm-blooded. Unlike most sharks, which are cold-blooded, megalodon is thought to have had a body temperature of around 27°C, higher than the sea temperatures at the time. Study co-author Robert Eagle, who is professor of marine science and geobiology at UCLA, said [via CNN]: “We found that O. megalodon had body temperatures significantly elevated compared to other sharks, consistent with it having a degree of internal heat production as modern warm-blooded (endothermic) animals do.” The researchers inferred these body temperatures by analysing how often carbon-13 and oxygen-18 isotopes were bonded together in the fossilised teeth, a pattern that depends on the temperature at which the teeth formed. Senior study author Kenshu Shimada, a paleobiologist at DePaul University in Chicago, said: “A large body promotes efficiency in prey capture with wider spatial coverage, but it requires a lot of energy to maintain.” “We know that Megalodon had gigantic cutting teeth used for feeding on marine mammals, such as cetaceans and pinnipeds, based on the fossil record. The new study is consistent with the idea that the evolution of warm-bloodedness was a gateway for the gigantism in Megalodon to keep up with the high metabolic demand.” Being warm-blooded means that the need to regulate its body temperature could have been the cause of its eventual demise. The Earth was cooling when the animal went extinct, which could have been a critical factor.
“The fact that Megalodon disappeared suggests the likely vulnerability of being warm-blooded because warm-bloodedness requires constant food intake to sustain high metabolism,” Shimada said. “Possibly, there was a shift in the marine ecosystem due to the climatic cooling,” he added. The cooling caused sea levels to drop, altering the habitats of the marine mammals and other prey that megalodon fed on, which may have led to its extinction. “One of the big implications for this work is that it highlights the vulnerability of large apex predators, such as the modern great white shark, to climate change given similarities in their biology with megalodon,” said lead study author Michael Griffiths, professor of environmental science, geochemist and paleoclimatologist at William Paterson University.

2023-07-04 21:54

Apple loses London appeal in 4G patent dispute with Optis

LONDON Apple Inc infringed two telecommunications patents used in devices including iPhones and iPads, London's Court of Appeal

2023-07-04 21:52

Meta loses as top EU court backs antitrust regulators over privacy breach checks

By Foo Yun Chee BRUSSELS (Reuters) - Antitrust authorities overseeing firms such as Facebook owner Meta Platforms are entitled to also

2023-07-04 19:25

Meta Loses EU Court Fight Over Antitrust Crackdown on Data

Meta Platforms Inc.’s Facebook lost its European Union court fight over a German antitrust order that homed in

2023-07-04 19:22

The world's shortest IQ test will reveal how average your intelligence is in 3 questions

IQ tests offer a formula that allows you to compare yourself to other people and see how average (or above average) your intelligence is. The Cognitive Reflection Test (CRT) is dubbed the world’s shortest IQ test because it consists of just three questions. It assesses your ability to recognise that a simple-looking problem can be harder than it first appears. The quicker you do this, the more intelligent you appear to be. Here are the three questions:

1. A bat and a ball cost £1.10 in total. The bat costs £1.00 more than the ball. How much does the ball cost?
2. If it takes five machines five minutes to make five widgets, how long would it take 100 machines to make 100 widgets?
3. In a lake, there is a patch of lily pads. Every day, the patch doubles in size. If it takes 48 days for the patch to cover the entire lake, how long would it take for the patch to cover half of the lake?

Here is what a lot of people guess:

1. 10 pence
2. 100 minutes
3. 24 days

These answers would be wrong. When you're ready, scroll down for the correct answers, and how you get to them:

1. The ball would actually cost 5 pence, or £0.05. If the ball costs X, the bat costs £1 more, so it costs X + 1. Therefore bat + ball = X + (X + 1) = 1.10. Thus 2X + 1 = 1.10, so 2X = 0.10 and X = 0.05.
2. It would take 5 minutes to make 100 widgets. Five machines can make five widgets in five minutes; therefore one machine will make one widget in five minutes too. So if we have 100 machines all making widgets, they can make 100 widgets in five minutes.
3. It would take 47 days for the patch to cover half of the lake. If the patch doubles in size each day going forward, it halves in size going backwards. So on day 47, the lake is half covered.

In a survey of almost 3,500 people, 33 per cent got all three wrong, and 83 per cent missed at least one.
While this IQ test has its shortcomings – its brevity, and lack of variation in verbal and non-verbal reasoning – only 48 per cent of MIT students sampled were able to answer all three correctly.
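The three worked answers can also be checked mechanically. The sketch below is one way to do it, using exact fractions so that £1.10 is not subject to floating-point rounding; the function names are illustrative, and the widget calculation assumes, as the puzzle does, that every machine works in parallel at the same fixed rate.

```python
from fractions import Fraction


def ball_price(total: Fraction, difference: Fraction) -> Fraction:
    """Bat + ball = total and bat = ball + difference, so 2 * ball + difference = total."""
    return (total - difference) / 2


def minutes_needed(machines: int, widgets: int,
                   ref_machines: int, ref_minutes: int, ref_widgets: int) -> Fraction:
    """Scale from a reference run, assuming all machines work in parallel at one fixed rate."""
    machine_minutes_per_widget = Fraction(ref_machines * ref_minutes, ref_widgets)
    return machine_minutes_per_widget * widgets / machines


def day_half_covered(days_to_full: int) -> int:
    """Walk back from the day the lake is full, halving the patch for each earlier day."""
    day, coverage = days_to_full, Fraction(1)
    while coverage > Fraction(1, 2):
        day -= 1
        coverage /= 2
    return day


if __name__ == "__main__":
    print(ball_price(Fraction("1.10"), Fraction("1.00")))  # 1/20, i.e. £0.05
    print(minutes_needed(100, 100, 5, 5, 5))                # 5
    print(day_half_covered(48))                             # 47
```

Each function encodes the step the intuitive answer skips: the bat's extra £1 is counted once, the machines work simultaneously, and doubling runs backwards as halving.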

2023-07-04 18:21

Why isn’t Twitter working? How Elon Musk finally broke his site – and why the internet might be about to get worse

It started like any other outage: unexplained error messages that told users they had hit their “rate limit”, and Twitter posts refusing to load. But as the weekend progressed, it became clear that these weren’t just any old technical problems, but rather issues that could define the future not only of Twitter but of the internet. Elon Musk took to Twitter on Saturday and announced that he would be introducing a range of changes “to address extreme levels of data scraping [and] system manipulation”. Users would only be able to see a limited number of posts, and those who are not logged in wouldn’t be able to see the site at all. That decision triggered those error messages: users were hitting the new “rate limit”, which capped how many posts they could request. The new limits – apparently temporary, though still in effect – meant that users were rationed in how many tweets they could see, and were shown frustrating and unexplained messages when they actually hit that limit. In many ways it was yet another perplexing and worrying decision by Mr Musk, whose stewardship of Twitter has lurched from scandal to scandal since he took over the company in October last year. (He appointed a chief executive, Linda Yaccarino, last month, but is still seemingly deciding, executing and communicating the company’s strategy.) But something seems different about the chaos this time around. For one, it is not one of the many content policy issues or potentially hostile ways of encouraging people to sign up for Twitter’s premium service that have marked Mr Musk’s leadership of Twitter so far; for another, it seemed to be part of a broader issue that is rattling the whole internet, of which Twitter might be only one symptom. It remains unclear whether Mr Musk’s latest decision really has anything to do with scraping by artificial intelligence systems, as he claimed.
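The article does not describe how Twitter's limiter actually works. As a generic illustration only, a per-user cap on post views can be sketched as a fixed-window counter: each user gets a budget of views per time window, and requests beyond it are refused until the window resets. The class name, the limit and the window length below are hypothetical, not Twitter's.

```python
import time
from typing import Callable


class FixedWindowViewLimiter:
    """Hypothetical per-user cap: at most `limit` post views per time window."""

    def __init__(self, limit: int, window_seconds: float = 86_400.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested without waiting
        self._window_start: dict[str, float] = {}
        self._views: dict[str, int] = {}

    def allow(self, user_id: str) -> bool:
        """Count the view and return True if the user is under the cap; otherwise return False."""
        now = self.clock()
        start = self._window_start.get(user_id)
        if start is None or now - start >= self.window_seconds:
            # First request, or the previous window expired: start counting afresh.
            self._window_start[user_id] = now
            self._views[user_id] = 0
        if self._views[user_id] >= self.limit:
            # This is the point where a site would show a "rate limit exceeded" error.
            return False
        self._views[user_id] += 1
        return True


if __name__ == "__main__":
    fake_now = [0.0]  # a controllable clock standing in for real time
    limiter = FixedWindowViewLimiter(limit=3, window_seconds=60.0, clock=lambda: fake_now[0])
    print([limiter.allow("alice") for _ in range(4)])  # [True, True, True, False]
    print(limiter.allow("bob"))    # True: each user has a separate counter
    fake_now[0] = 61.0             # move past the end of alice's window
    print(limiter.allow("alice"))  # True: the counter has been reset
```

A fixed window is the simplest design; production services often prefer sliding windows or token buckets to smooth out bursts at window boundaries, and commonly answer refused requests with HTTP status 429 (Too Many Requests).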
But the explanation certainly makes sense: AI systems require vast corpora of text and images to be trained on, and the companies that make them have gathered that material by scraping and regurgitating the text that can be easily found across the web. Every time someone wants to load a web page, their computer makes a request to that company’s servers, which then send back the data that the user’s web browser reconstructs into the page. If you want to load Elon Musk’s Twitter account, for instance, you direct your browser to the relevant address and it will show his Twitter posts, pulled down from the internet. That comes with costs, of course, including the price of running those servers and the bandwidth required to send vast amounts of data quickly across the internet. For the most part on the modern internet, that cost has been covered by also sending along some advertising, or requiring that people sign up for a subscription to see the content they are asking for. AI companies scraping those sites, however, request that data frequently and quickly. And since the scraping is automated, the scrapers do not look at ads or pay for subscriptions, meaning that companies are not paid for the content they are providing. That issue looks to be growing across the internet. Companies that host text discussions, such as Twitter, are very aware that they might be serving up the same data that could one day render them obsolete, and are keen to at least make some money from that process. It also appears to be part of the reason behind the recent fallout at Reddit. That site is especially useful for feeding to an AI – it includes very human and very helpful answers to the kinds of questions that users might ask an AI system – and the company is very aware that it is, once again, giving up the information that might also be used to overtake it.
To try and solve that, it recently announced that it would be charging large sums for access to its API, which serves as the interface through which automated systems can hoover up that data. It was at least partly intended as a way to generate money from those AI companies, though it also had the effect of making it too expensive for third-party Reddit clients – which also rely on that API – to keep running, and the most popular ones have since shut down. There is good reason to think that this will keep happening. The web is increasingly being hoovered up by the same AI systems that will eventually be used to further degrade the experience of using it: Twitter is, in effect, being used to train the same bots that will one day post misleading and annoying messages all over Twitter. Every website that hosts text, images or video could face the same problems, as AI companies look to build up their datasets and train up their systems. As such, all of the internet could become more like Mr Musk’s Twitter did over the weekend: actively hostile to actual users, as it attempts to keep the fake users away. But it is just as likely that Mr Musk’s explanation for why the site went down conveniently chimes with the zeitgeist, and helpfully shifts blame to the AI companies that he has already voiced significant scepticism about. The truth may be that Twitter – which has fired the vast majority of its staff, including those in its engineering teams – is finally running into the kind of infrastructure problems that arise when fewer people are around to keep the site online. Twitter’s former head of trust and safety, Yoel Roth, is perhaps the best qualified person to suggest that is the case. He said that Mr Musk’s argument for the new limits “doesn’t pass the sniff test” and instead suggested that it was the result of someone mistakenly breaking the rate limiter and then having that accident passed off by Mr Musk as being intentional, whether he knows that or not.
“For anyone keeping track, this isn’t even the first time they’ve completely broken the site by bumbling around in the rate limiter,” Mr Roth wrote on Twitter rival Bluesky. “There’s a reason the limiter was one of the most locked down internal tools. Futzing around with rate limits is probably the easiest way to break Twitter.” Mr Roth also said that Twitter has long been aware that it was being scraped – and that it was OK with it. He called it the “open secret of Twitter data access” and said the company considered it “fine”. And he too suggested that the events of the weekend could be a hint about what is coming to the internet, offering an entirely different alternative. It’s not Twitter, Reddit and other companies who should really be upset about what is going on, he suggested. “There’s some legitimacy to Twitter and Reddit being upset with AI companies for slurping up social data gratis in order to train commercially lucrative models,” Mr Roth said. “But they should never forget that it’s not *their* data — it’s ours. A solution to parasitic AI needs to be user-centric, not profit-centric.”

2023-07-04 15:48

Amazon, Google, Apple, Meta, Microsoft say they meet EU gatekeeper status

By Foo Yun Chee BRUSSELS Alphabet's Google, Amazon, Apple, Meta Platforms and Microsoft have notified the European Commission

2023-07-04 14:48

Veoneer Launching Sale of $500 Million Passive Safety Unit, Sources Say

The owner of automotive technology company Veoneer has kicked off a sale of its passive safety business, according

2023-07-04 13:17