Some of the most influential voices in the tech industry are set to meet with federal lawmakers Wednesday morning as the US Senate prepares to draw up legislation regulating the fast-moving artificial intelligence industry.

Among those attending the in-person event will be the CEOs of Anthropic, Google, IBM, Meta, Microsoft, Nvidia, OpenAI, Palantir and X, the company formerly known as Twitter. The guest list also includes Bill Gates, the former CEO of Microsoft, and Eric Schmidt, the former CEO of Google, along with leading officials from the entertainment industry, civil rights groups and labor organizations.

Wednesday's meeting, with its expected all-star cast, marks the first of nine sessions hosted by Senate Majority Leader Chuck Schumer, who has pledged to craft comprehensive guardrails regulating the AI sector in what he's described as an unprecedented congressional effort.

The push reflects policymakers' growing awareness of how artificial intelligence, and particularly the type of generative AI popularized by tools such as ChatGPT, could disrupt business and everyday life in numerous ways, from increasing commercial productivity to threatening jobs, national security and intellectual property.

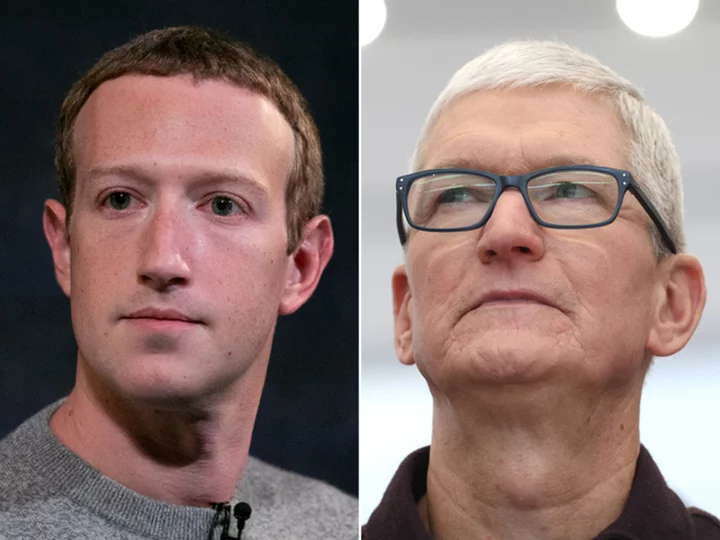

The session held at the US Capitol in Washington could give the tech industry its most significant opportunity yet to influence how lawmakers design the rules that could govern AI. Some companies, including Google, IBM, Microsoft and OpenAI, have already offered their own in-depth proposals in white papers and blog posts that describe layers of oversight, testing and transparency — though some companies differ on key questions such as whether a new federal agency is needed to regulate AI. (It will also likely be the first time that Meta CEO Mark Zuckerberg and X owner Elon Musk have shared a room since the two men began challenging each other to a cage fight months ago.)

But crucially, the event could also shed light on the political feasibility of a broad, sweeping AI law, setting expectations for what Congress may achieve.

"I think what these forums will do is give some insight into, you know, what is the range of opinion among members of Congress?" said Christopher Padilla, vice president of IBM's global government affairs team. "Is there some consensus on some basic things, like transparency, or respecting intellectual property rules, or explainability of algorithms? Is there a common denominator someplace where enough members could agree? I think we'll learn that through this process."

At the meeting, Padilla added, IBM plans to highlight how some of the company's clients are currently using its AI tools, as well as IBM's proposed vision for AI policy, which calls for applying escalating restrictions to algorithms depending on the risks their use may cause. IBM CEO Arvind Krishna will also seek to "demystify" a widely held impression that AI development is done only by a handful of companies like OpenAI or Google, Padilla said.

Call for regulation

Executives such as OpenAI CEO Sam Altman have already wowed some senators by publicly calling for new rules early in the industry's lifecycle, a move some lawmakers see as a welcome contrast to the social media industry, which has resisted regulation.

Clement Delangue, co-founder and CEO of the AI company Hugging Face, tweeted last month that Schumer's guest list "might not be the most representative and inclusive," but that he would "try my best to share insights from a broad range of community members, especially on topics of openness, transparency, inclusiveness and distribution of power."

Civil society groups have voiced concerns about AI's possible dangers, such as the risk that poorly trained algorithms may inadvertently discriminate against minorities, or that they could ingest the copyrighted works of writers and artists without compensation or permission. Some authors have sued OpenAI over those claims, while others have asked in an open letter to be paid by AI companies. Content producers including CNN, The New York Times and Disney have blocked ChatGPT from using their content. (OpenAI has said exemptions such as fair use apply to its training of large language models.)

"We will push hard to make sure it's a truly democratic process with full voice and transparency and accountability and balance," said Maya Wiley, president and CEO of the Leadership Conference on Civil and Human Rights, "and that we get to something that actually supports democracy; supports economic mobility; supports education; and innovates in all the best ways and ensures that this protects consumers and people at the front end — and just not try to fix it after they've been harmed."

The concerns reflect what Wiley described as "a fundamental disagreement" with tech companies extending from how social media platforms have handled mis- and disinformation, hate speech and incitement.

"They're complicated issues, but their way of how [the companies] understand and balance them, how they see cost centers in trust and safety rather than as really important investments .... we have real disagreements there," Wiley said, adding that giving underrepresented groups a seat at the table will be crucial to a successful outcome. "While we share a lot of the same principles in many instances, I think the question is, how do we find the right balance that understands there are some legitimate issues on all sides of this conversation, but that without representation, without access ... we are going to have larger societal problems."

Developing policy

Navigating those diverse interests will be Schumer, who along with three other senators — South Dakota Republican Sen. Mike Rounds, New Mexico Democratic Sen. Martin Heinrich and Indiana Republican Sen. Todd Young — is leading the Senate's approach to AI. Earlier this summer, Schumer held three informational sessions for senators to get up to speed on the technology, including one classified briefing featuring presentations by US national security officials.

Wednesday's meeting with tech executives and nonprofits marks the next stage of lawmakers' education on the issue before they get to work developing policy proposals. In announcing the series in June, Schumer emphasized the need for a careful, deliberate approach and acknowledged that "in many ways, we're starting from scratch."

Schumer's personal involvement in the effort highlights what he has described as the unique challenge that AI poses for congressional leaders, and the need for a special process.

"AI is unlike anything Congress has dealt with before," he said. "It's not like labor, or healthcare, or defense, where Congress has had a long history we can work off of. Experts aren't even sure which questions policymakers should be asking."

In a proposed framework for legislation, Schumer suggested that any laws Congress passes to regulate AI should prioritize innovation while ensuring that democracy, national security and consumers' ability to understand the technology are not compromised. A smattering of AI bills have already emerged on Capitol Hill and seek to rein in the industry in various ways, but Schumer's push represents a higher-level effort to coordinate Congress's legislative agenda on the issue.

New AI legislation could also serve as a backstop to voluntary commitments that some AI companies made to the Biden administration earlier this year to ensure their AI models undergo outside testing before they are released to the public.

But even as US lawmakers prepare to legislate by meeting with industry and civil society groups, they are already months if not years behind the European Union, which is expected to finalize a sweeping AI law by year's end that could ban the use of AI for predictive policing and restrict how it can be used in other contexts.